Problem

My earlier chameleon_stable_diffusion tool connects to the server the first time its interface is opened, to fetch the names of the available AI models and display them in the UI. When the network and server are healthy, the UI opens smoothly (in 0.1 to 0.2 seconds). When the server is unavailable, however, the experience becomes very poor: the entire editor freezes for several seconds until Python raises a network connection timeout exception.

If you build a Chameleon tool that reads a lot of data when it opens, or that makes network requests, you are likely to run into the same problem. This post and the example below show how to solve it.

Brief Steps

- Create a ChameleonTaskExecutor instance in the tool's __init__ function; it is used to submit asynchronous tasks.
- In the InitPyCmd of the Chameleon tool's JSON, after the tool instance is created, call some_slow_tasks to simulate time-consuming tasks.
- In some_slow_tasks, submit two asynchronous tasks to the executor and specify the callback function to run when each task completes.
- In the callback function, get the task's return value through its future_id and update the interface.

This example can be found in TAPython_DefaultResources and will be included in subsequent versions of TAPython.

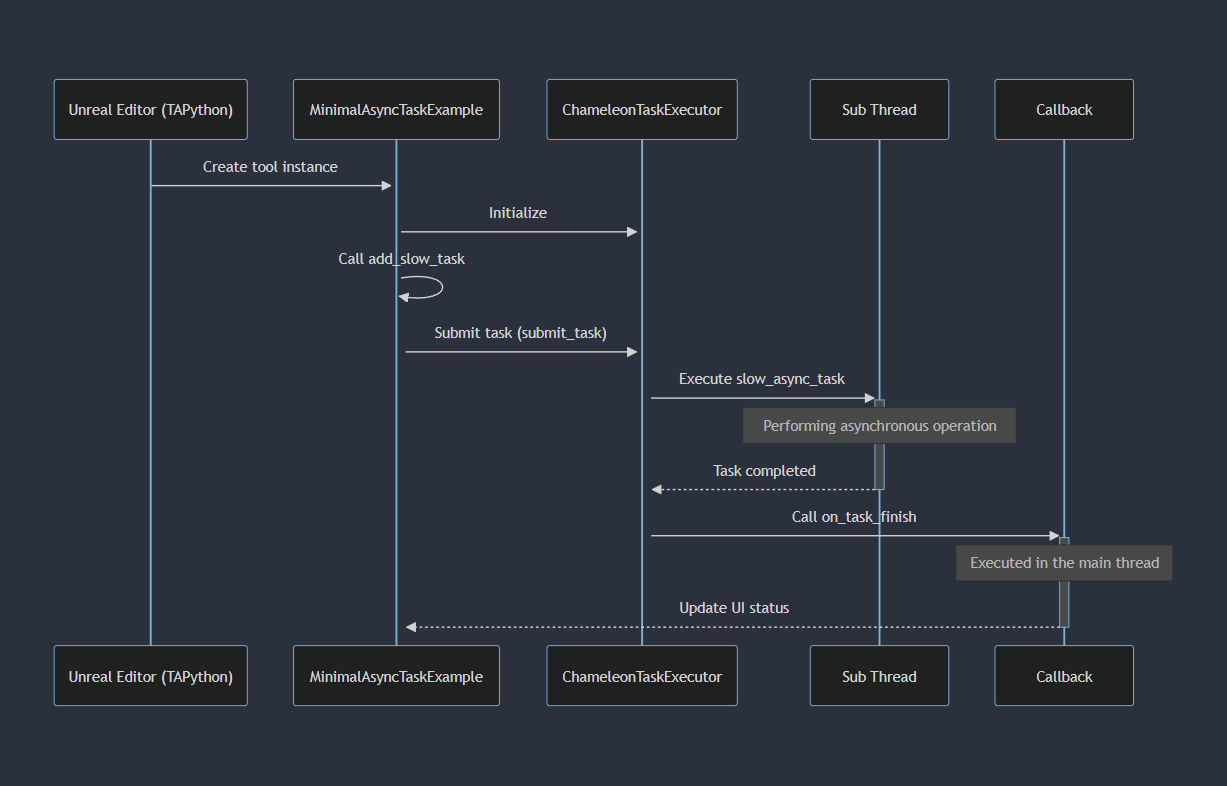

Sequence Diagram:

Detailed Introduction

Prerequisites

Unreal Engine restricts access to Slate widgets from any thread other than the game thread (or the Slate loading thread).

NOTE

```cpp
// SlateGlobals.h
#define SLATE_CROSS_THREAD_CHECK() checkf(IsInGameThread() || IsInSlateThread(), TEXT("Access to Slate is restricted to the GameThread or the SlateLoadingThread!"));
```

Because of this restriction, our goals are to:

- Execute time-consuming tasks on threads other than the main thread

- After a time-consuming task completes, update Slate widgets on the main thread through a callback function
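To make the rule concrete outside the engine, here is a minimal pure-Python sketch of a guard in the spirit of SLATE_CROSS_THREAD_CHECK. The decorator main_thread_only and the functions set_text and try_from_worker are hypothetical stand-ins, not TAPython or Unreal APIs, and threading.main_thread() plays the role of IsInGameThread().

```python
import threading
from functools import wraps


def main_thread_only(func):
    """Hypothetical guard mimicking SLATE_CROSS_THREAD_CHECK: refuse calls off the main thread."""
    @wraps(func)
    def wrapper(*args, **kwargs):
        if threading.current_thread() is not threading.main_thread():
            raise RuntimeError(f"{func.__name__} may only be called on the main thread")
        return func(*args, **kwargs)
    return wrapper


@main_thread_only
def set_text(widget: dict, text: str) -> None:
    # Stand-in for a Slate widget update; a dict plays the widget.
    widget["text"] = text


def try_from_worker(widget: dict) -> str:
    """Call set_text from a worker thread and report whether the guard rejected it."""
    outcome = {}

    def worker():
        try:
            set_text(widget, "from worker")
            outcome["status"] = "ok"
        except RuntimeError:
            outcome["status"] = "rejected"

    t = threading.Thread(target=worker)
    t.start()
    t.join()
    return outcome["status"]


if __name__ == "__main__":
    label = {"text": ""}
    set_text(label, "from main")   # allowed: this runs on the main thread
    print(label["text"])           # prints "from main"
    print(try_from_worker(label))  # prints "rejected"
```

The worker thread is therefore free to do the slow work, but any widget update has to be handed back to the main thread.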

In this example, MinimalAsyncTaskExample, two "slow tasks" run when the UI opens, simulating file reads or network requests. When each task completes, the UI is updated to display its result.

Let's see how it's done:

1. Call the slow tasks at startup

```json
{
    "TabLabel": "Chameleon Async Example",
    "InitTabSize": [350, 186],
    "InitTabPosition": [800, 100],
    "InitPyCmd": "import Example; chameleon_mini_async_example = Example.MinimalAsyncTaskExample.MinimalAsyncTaskExample(%JsonPath); chameleon_mini_async_example.some_slow_tasks()",
    "Root": {
        ...
    }
}
```

After the regular tool startup code, chameleon_mini_async_example = Example.MinimalAsyncTaskExample.MinimalAsyncTaskExample(%JsonPath), we call chameleon_mini_async_example.some_slow_tasks().

Note that some_slow_tasks() is called in the JSON file through the tool's instance variable chameleon_mini_async_example, rather than from the tool's constructor __init__. This ensures that by the time some_slow_tasks runs, the tool and its interface have already been created and are reachable through the global variable.

NOTE

Because we use the singleton pattern, the Chameleon tool executes __init__ only once, even if the tool is opened multiple times. If the module is reloaded in InitPyCmd with importlib.reload, the Chameleon tool executes __init__ again.

Asynchronous tasks can also be submitted at other points while the tool is running, for example sending a network request (such as to Stable Diffusion) or starting another slow task after a button is clicked. The button in this example simulates this case.

2. Submit asynchronous tasks

In some_slow_tasks, we submit self.slow_async_task twice as an asynchronous task, passing a different argument each time. When each task completes, the callback self.on_task_finish is called for it.

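```python
def some_slow_tasks(self):
    # Show a busy indicator while the tasks are running.
    self.show_busy_icon(True)
    # Submit two tasks with different arguments; on_task_finish is called as each one completes.
    self.executor.submit_task(self.slow_async_task, args=[2], on_finish_callback=self.on_task_finish)
    self.executor.submit_task(self.slow_async_task, args=[3], on_finish_callback=self.on_task_finish)
```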

TIP

Different asynchronous tasks execute and complete in an indeterminate order.
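A quick way to see this with plain concurrent.futures, the library the executor builds on: tasks submitted in one order can complete in another. slow_task and completion_order are illustrative names, and the sleep durations are arbitrary values chosen only to make the effect visible.

```python
import time
from concurrent.futures import ThreadPoolExecutor, as_completed


def slow_task(seconds: float) -> float:
    # Simulates a slow file read or network call.
    time.sleep(seconds)
    return seconds


def completion_order(durations):
    """Submit tasks in the given order and return their results in the order they finish."""
    with ThreadPoolExecutor(max_workers=len(durations)) as pool:
        futures = [pool.submit(slow_task, d) for d in durations]
        return [f.result() for f in as_completed(futures)]


if __name__ == "__main__":
    # Submitted as 0.5 then 0.05; the shorter task normally finishes first.
    print(completion_order([0.5, 0.05]))
```

Callbacks therefore should not assume which task finishes first; identifying each task by its own id avoids that assumption.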

Here, we use an executor to submit the asynchronous tasks. The executor manages their execution and runs the callback functions when the tasks complete.

Internally, it uses ThreadPoolExecutor from the concurrent.futures library to submit and execute the tasks.

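```python
from typing import Callable, Union

def submit_task(self, task: Callable, args=None, kwargs=None, on_finish_callback: Union[Callable, str] = None) -> int:
    ...  # body omitted; returns an int id for the submitted task
```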

submit_task takes the task function task together with its positional and keyword arguments, args and kwargs. It also accepts an optional callback, on_finish_callback, which is called when the task completes.

on_finish_callback can be either a function or a string. If the function takes parameters, the task's future_id is passed to it as the first argument.
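To make the future_id contract concrete, here is a hypothetical, simplified executor built directly on ThreadPoolExecutor. MiniTaskExecutor is an illustrative name, not ChameleonTaskExecutor's real code: it keeps a dict from integer ids to Future objects and passes the id to callbacks that declare a parameter. It deliberately omits string callbacks and game-thread dispatch, so its callbacks run on worker threads and must not touch Slate.

```python
import inspect
import itertools
import threading
import time
from concurrent.futures import Future, ThreadPoolExecutor
from typing import Callable, Dict, Optional


class MiniTaskExecutor:
    """Hypothetical, simplified stand-in for ChameleonTaskExecutor (not its real code)."""

    def __init__(self, max_workers: int = 4):
        self._pool = ThreadPoolExecutor(max_workers=max_workers)
        self._futures: Dict[int, Future] = {}
        self._ids = itertools.count(1)
        self._lock = threading.Lock()

    def submit_task(self, task: Callable, args=None, kwargs=None,
                    on_finish_callback: Optional[Callable] = None) -> int:
        """Run task(*args, **kwargs) on the pool and return its integer future_id."""
        future = self._pool.submit(task, *(args or []), **(kwargs or {}))
        with self._lock:
            future_id = next(self._ids)
            self._futures[future_id] = future
        if on_finish_callback is not None:
            # Pass future_id only if the callback declares a parameter for it.
            wants_id = len(inspect.signature(on_finish_callback).parameters) > 0

            def _done(_future):
                # Runs on the worker thread that finished the task (or immediately on
                # this thread if it had already finished). No game-thread dispatch here.
                if wants_id:
                    on_finish_callback(future_id)
                else:
                    on_finish_callback()

            future.add_done_callback(_done)
        return future_id

    def get_future(self, future_id: int) -> Optional[Future]:
        with self._lock:
            return self._futures.get(future_id)

    def is_any_task_running(self) -> bool:
        with self._lock:
            return any(not f.done() for f in self._futures.values())

    def shutdown(self) -> None:
        # Waits for all tasks; their done-callbacks have run by the time this returns.
        self._pool.shutdown(wait=True)


if __name__ == "__main__":
    executor = MiniTaskExecutor()
    results = {}

    def slow_async_task(n):
        # Arbitrary stand-in work: sleep briefly, then return n * 10.
        time.sleep(n * 0.05)
        return n * 10

    def on_task_finish(future_id):
        results[future_id] = executor.get_future(future_id).result()

    first = executor.submit_task(slow_async_task, args=[2], on_finish_callback=on_task_finish)
    second = executor.submit_task(slow_async_task, args=[3], on_finish_callback=on_task_finish)
    executor.shutdown()
    print(results[first], results[second])  # prints "20 30"
```

Because the callback receives only an integer, it can look up the Future itself, which is why no other parameters are usually needed.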

CAUTION

Lambda functions cannot be passed as callbacks, because the lambda's context is lost by the time the asynchronous task finishes and the callback is executed.

Note that no other parameters are passed to on_finish_callback. In most cases, the future_id alone is enough to tell tasks apart and to retrieve each task's return value.

If you do need to pass other parameters, you can generate a new function with a closure, or pass the callback in its string form:

```python
self.executor.submit_task(
    self.slow_async_task,
    args=[3],
    on_finish_callback="chameleon_mini_async_example.some_callback(%, other_param='task3')",
)
```

TIP

"%" is replaced with the task's future_id.
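A sketch of what that substitution amounts to, assuming a plain text replace (the executor's actual substitution code is not shown here; build_command and FakeTool are hypothetical names). Running the result with exec() against a namespace dict also shows why every name used in the string must be reachable from the global scope. Note that a plain replace would also rewrite any other "%" characters in the command string.

```python
def build_command(cmd_template: str, future_id: int) -> str:
    """Hypothetical: substitute the task's future_id for every '%' in a string callback."""
    return cmd_template.replace("%", str(future_id))


class FakeTool:
    """Illustrative stand-in for the tool instance; not the real MinimalAsyncTaskExample."""

    def __init__(self):
        self.calls = []

    def some_callback(self, future_id, other_param=None):
        self.calls.append((future_id, other_param))


if __name__ == "__main__":
    template = "chameleon_mini_async_example.some_callback(%, other_param='task3')"
    cmd = build_command(template, 7)
    print(cmd)  # prints "chameleon_mini_async_example.some_callback(7, other_param='task3')"

    # The command is plain Python text, so its names must resolve in the namespace
    # it runs in; here a dict stands in for the editor's global scope.
    tool = FakeTool()
    exec(cmd, {"chameleon_mini_async_example": tool})
    print(tool.calls)  # prints "[(7, 'task3')]"
```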

3. Executing asynchronous tasks

This part is transparent to the user: we only need to care about submitting tasks and implementing the callbacks. If you are not interested in the internal implementation details, you can skip this section.

This example uses ThreadPoolExecutor to execute the asynchronous tasks. ThreadPoolExecutor manages a pool of worker threads and creates new threads as needed, up to its max_workers limit. Documentation: https://docs.python.org/3/library/concurrent.futures.html#concurrent.futures.ThreadPoolExecutor

In submit_task, the callback is attached to the Future with future.add_done_callback(_func), where _func is a temporary function created with a closure. _func executes the string form of the callback passed to submit_task through unreal.PythonBPLib.exec_python_command(cmd, force_game_thread=True). Because it relies on unreal.PythonBPLib, this part only works inside Unreal Engine.

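```python
...
def _func(_future):
    # Called when the future completes, normally on the worker thread that ran the task;
    # force_game_thread=True has the command executed on the game thread instead.
    unreal.PythonBPLib.exec_python_command(cmd, force_game_thread=True)

future.add_done_callback(_func)
...
```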
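Outside Unreal, the same hand-off can be sketched with a queue: the done-callback only forwards a command string, and the main thread executes it on its next "tick". exec_on_main_thread and pump_main_thread are hypothetical stand-ins for exec_python_command(..., force_game_thread=True) and the editor's tick, not TAPython APIs, and the plain "%" replace is likewise an assumption.

```python
import queue
import threading
import time
from concurrent.futures import ThreadPoolExecutor

# Hypothetical stand-in for the game thread's work queue.
_main_thread_commands = queue.Queue()


def exec_on_main_thread(cmd: str) -> None:
    """Analog of exec_python_command(cmd, force_game_thread=True): queue the command, don't run it."""
    _main_thread_commands.put(cmd)


def pump_main_thread(namespace: dict) -> int:
    """Run queued commands on the calling thread, like one editor tick. Returns how many ran."""
    ran = 0
    while True:
        try:
            cmd = _main_thread_commands.get_nowait()
        except queue.Empty:
            return ran
        exec(cmd, namespace)
        ran += 1


def submit_with_string_callback(pool, task, arg, cmd_template: str, future_id: int):
    future = pool.submit(task, arg)

    def _func(_future):
        # Called on the worker thread that finished the task (or right away on this
        # thread if it is already done), so it only forwards the command.
        exec_on_main_thread(cmd_template.replace("%", str(future_id)))

    future.add_done_callback(_func)
    return future


def slow_task(seconds: float) -> float:
    time.sleep(seconds)
    return seconds


def run_demo() -> dict:
    futures, results = {}, {}

    def on_task_finish(future_id: int) -> None:
        # Reached only through pump_main_thread, i.e. on the main thread.
        assert threading.current_thread() is threading.main_thread()
        results[future_id] = futures[future_id].result()

    with ThreadPoolExecutor(max_workers=2) as pool:
        for fid, seconds in ((1, 0.05), (2, 0.1)):
            futures[fid] = submit_with_string_callback(
                pool, slow_task, seconds, "on_task_finish(%)", fid)
    # Leaving the with-block waits for both tasks; one "tick" then runs both callbacks.
    pump_main_thread({"on_task_finish": on_task_finish})
    return results


if __name__ == "__main__":
    print(run_demo())
```

The key design point is that the worker thread never calls the callback directly; it only schedules it, which is the role force_game_thread=True plays in the real tool.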

Here, self.on_task_finish is converted to "chameleon_mini_async_example.on_task_finish" and executed on the main thread through exec_python_command. If the callback is a function from another module or a static function, it must likewise be accessible from the global scope.

To see how a callable callback is converted to its string form, look at the get_cmd_str_from_callable function in ChameleonTaskExecutor.py.
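As a rough illustration only, here is a hypothetical conversion that is not TAPython's get_cmd_str_from_callable. It assumes that a bound method is resolved by searching a namespace dict for the global variable holding its instance, and that a plain function is reachable as module.qualname. Under these assumptions a lambda is rejected, since it has no name that a command string could refer to.

```python
from typing import Callable


def cmd_str_from_callable(func: Callable, namespace: dict) -> str:
    """Hypothetical sketch: turn a callback into a command string with a '%' placeholder."""
    if getattr(func, "__name__", "") == "<lambda>":
        raise ValueError("lambdas have no name that a command string can refer to")
    owner = getattr(func, "__self__", None)
    if owner is not None:
        # Bound method: find the global variable that holds the instance.
        for var_name, value in namespace.items():
            if value is owner:
                return f"{var_name}.{func.__name__}(%)"
        raise ValueError(f"no global variable refers to {owner!r}")
    # Plain function: assume it is reachable as module.qualname from the global scope.
    return f"{func.__module__}.{func.__qualname__}(%)"


if __name__ == "__main__":
    class MinimalTool:
        def on_task_finish(self, future_id):
            print("finished", future_id)

    chameleon_mini_async_example = MinimalTool()
    print(cmd_str_from_callable(chameleon_mini_async_example.on_task_finish, globals()))
    # prints "chameleon_mini_async_example.on_task_finish(%)"
```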

TIP

Other thread pools or process pools can be used instead of ThreadPoolExecutor, as long as the callback function is still executed correctly when each task completes.

4. Run the callback when a task completes

Because of the restriction described earlier, asynchronous tasks cannot access Slate widgets directly. We therefore put all interface changes in the callback function, which runs on the main thread, where Slate widgets can be modified safely.

```python
def on_task_finish(self, future_id: int):
    # This function is called on the main thread, so it is safe to modify Slate widgets here.
    future = self.executor.get_future(future_id)
    if future is None:
        unreal.log_warning(f"Can't find future: {future_id}")
    else:
        self.data.set_text(self.ui_text_block, f"Task done, result: {future.result()}")
    # Hide the busy indicator once the last task has finished.
    if not self.executor.is_any_task_running():
        self.show_busy_icon(False)
    print(f"on_task_finish. Future: {future_id}, result: {future.result() if future else 'invalid'}")
```

Tips

Which tasks should run as asynchronous slow tasks

- Reading large files or large amounts of data

- Network requests

- Other operations that do not touch UE assets

Which tasks should not run as asynchronous slow tasks

- Operations on UE assets

- Manipulation of Slate widgets

Which code should be placed in the callback

- Notifications that a task has completed

- Direct modifications of Slate widgets